What can Elon Musk's new Grok 3 (xAI) do?

Elon Musk, the man behind SpaceX, The Boring Company and DOGE, has launched the AI service Grok 3, promising "the smartest AI on Earth".

The presentation covered Grok 3's capabilities, its lack of censorship, and its benchmark results.

However, the star of the first part of the show was not the AI itself but "Colossus", a giant cluster of 200,000 GPUs used to train Grok 3. It is truly the largest "mining farm" around, if you can call it that.

Colossus was built in two stages:

- 122 days to bring the first 100,000 GPUs online for synchronous training;

- 92 days to scale up to the full 200,000.

But when xAI moved on to demonstrating Grok 3 live, the presentation felt more like a game of catch-up than innovation. AI tools such as ChatGPT, Claude, Gemini, Perplexity and the Chinese startup DeepSeek can already do the same things. Code generation, image generation, and web search combined with a "reasoning" mode no longer surprise anyone.

The market reacted anyway. According to crypto.news, Grok-themed tokens have risen by more than 30,000%. These meme coins, however, have nothing to do with Musk's project.

Grok 3 vs. ChatGPT in practice

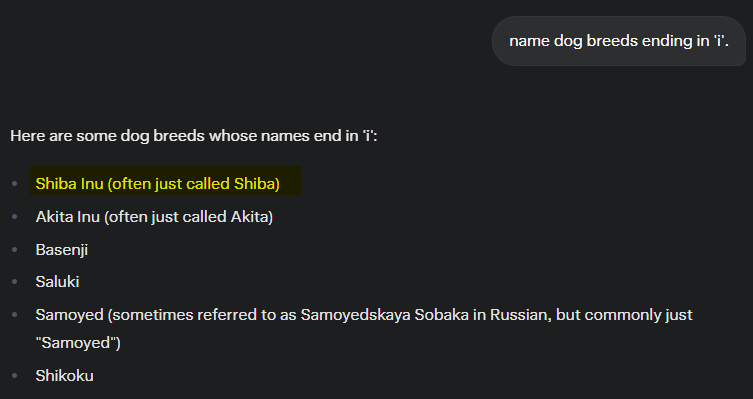

To test its capabilities, we started with a simple query, asking Grok 3 to name dog breeds ending in "i".

At first, it failed, listing breeds that did not meet the criterion, including Shiba Inu. After we switched to "Thinking" mode, however, the response improved and the model named correct breeds.
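This criterion is trivial to check mechanically, which is what makes the initial failure notable. The minimal Python sketch below filters a hypothetical list of candidate answers (an illustrative assumption, not Grok 3's actual output) by last letter. It shows why Shiba Inu fails: its name ends in "u", not "i".

```python
# Hypothetical candidate answers, for illustration only.
candidates = ["Basenji", "Saluki", "Borzoi", "Shiba Inu", "Puli", "Akita"]


def ends_with(name: str, letter: str) -> bool:
    """Return True if the name's final letter matches, ignoring case and surrounding spaces."""
    return name.strip().lower().endswith(letter.lower())


# Split candidates into breeds that satisfy the "ends in i" rule and those that do not.
valid = [breed for breed in candidates if ends_with(breed, "i")]
rejected = [breed for breed in candidates if not ends_with(breed, "i")]

print("valid:", valid)
print("rejected:", rejected)
```

A simple post-check like this can catch the kind of error the model made in its non-reasoning mode.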

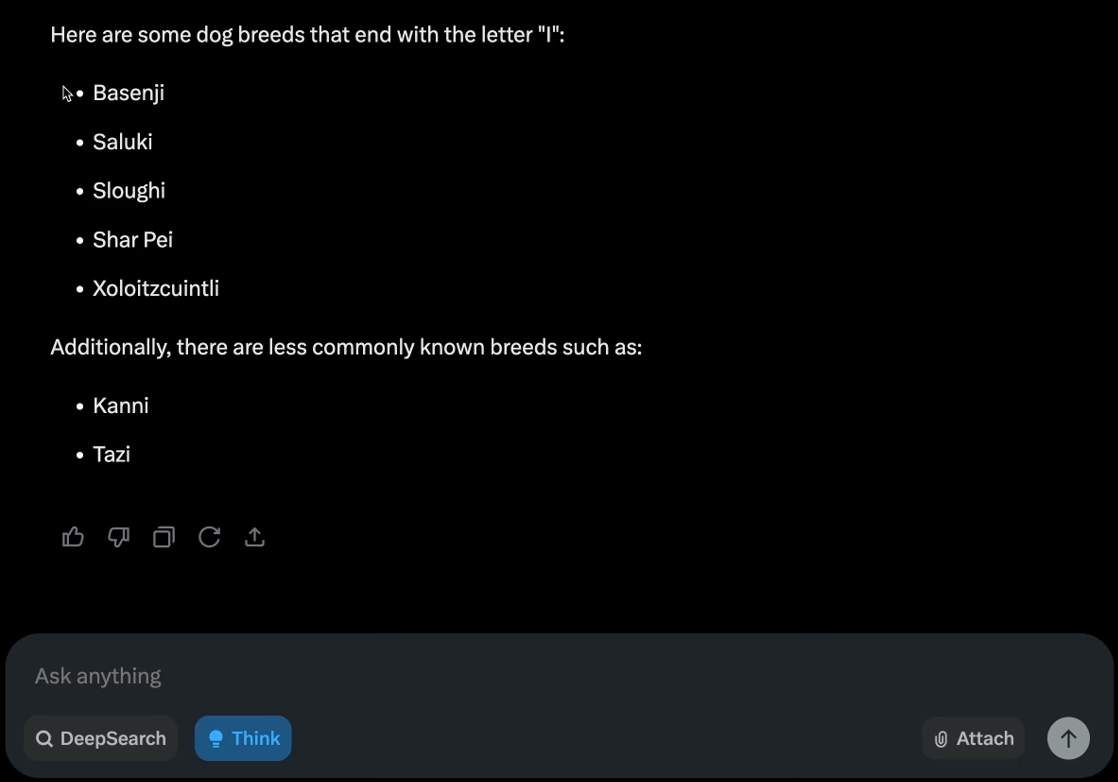

Next, we tested Grok 3's code generation and compared the results with ChatGPT's. We asked both to create a game combining Snake and Space Invaders.

The code generation speed was impressive: the model worked noticeably faster than ChatGPT. However, when we ran the code, the result was disappointing: only a block on a white background appeared on the screen.

ChatGPT, by contrast, generated fully functional code that matched the request.

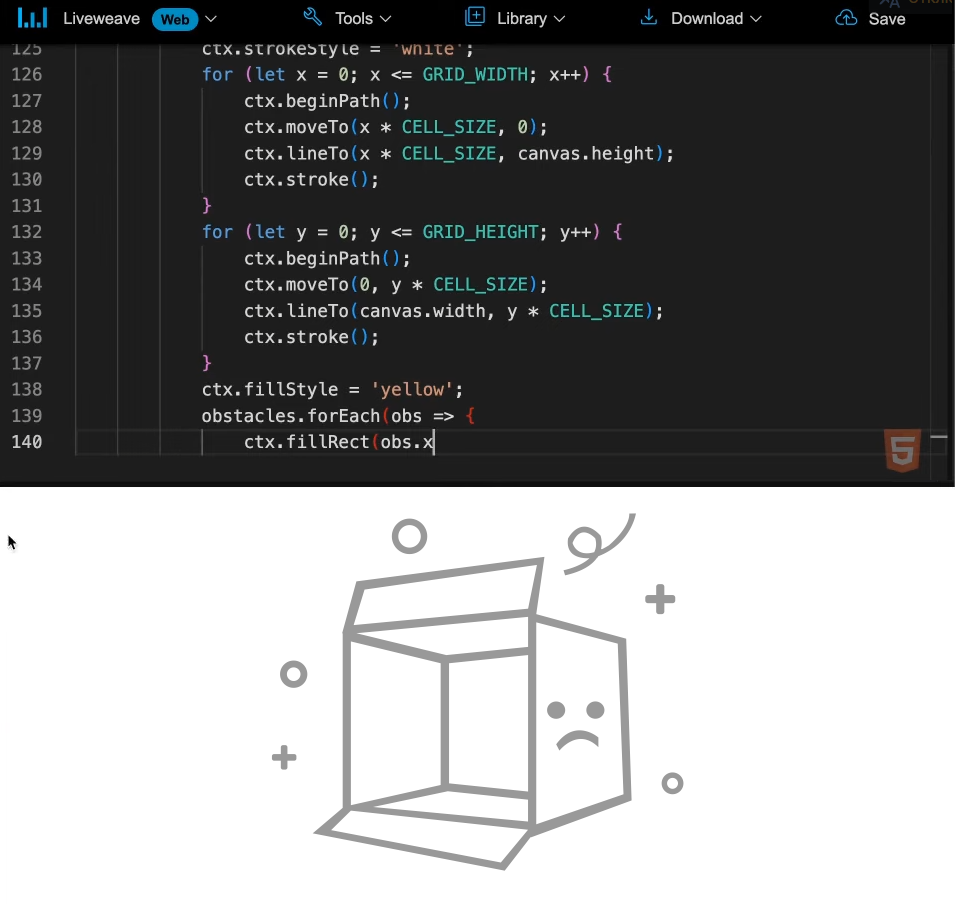

Image generation is a different story: in our test, Grok 3 produced better images than ChatGPT. Its results looked more realistic and less cartoonish.

We also tested Deep Search, a Grok 3 feature similar to ChatGPT Pro's or Perplexity AI's web search mode.

The results were quick but uneven. Grok 3 retrieved relevant posts from its database and external sites, but some links were out of date.

Bottom line: Grok 3 shows promise for logic tasks, but its handling of code, content, and search needs some work. While ChatGPT and Perplexity are currently leading the pack, the model could become competitive over time.

Additional Features

In addition to web search, images, and reasoning mode, Grok 3 has the following features:

- Real-time access to information via the X platform.

- Answers delivered with wit and humor while remaining useful and insightful.

What Neural Networks Still Can't Do

It's worth remembering that, despite the hype around AI models, none of them has solved these fundamental problems:

- Hallucinations: models confidently describe things that do not exist.

- Data: gaps in the training data, and meaning lost during training.

- Noise: training data is scraped from the Internet automatically, without checking its reliability.

- Scaling: training LLMs costs millions of dollars, while the resulting efficiency gains are often as small as 1%.

Despite these unsolved problems, AI continues to merge with cryptocurrency.

The relationship between cryptocurrency and neural networks

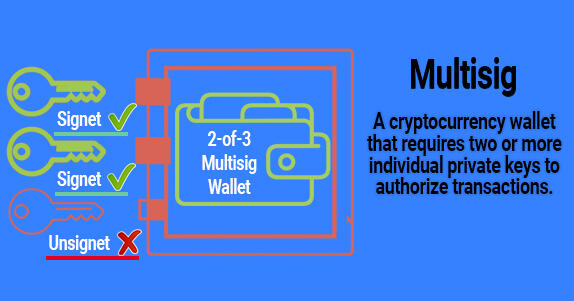

A growing number of projects combine blockchain and artificial intelligence (AI) to build a decentralized digital economy in which autonomous economic agents (AEAs) operate.

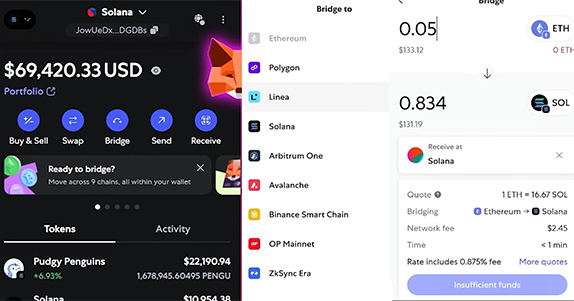

AI agents or bots have become the hottest new thing in the crypto space. They help with the entire blockchain workflow, from executing transactions to providing real-time market analysis.

With thousands of crypto AI agents already deployed, agent-centric blockchain development is likely to advance even further in the coming years.

As in blockchain, the “scale race” still dominates the AI industry, but the future lies in optimization rather than endless scaling.

Even now, combining new architectures, algorithms, and layers makes it possible to reach acceptable efficiency without spending millions.

The key trend is the balance between the size of the model, energy consumption and practical benefits.